LLM Ingestion Control and the AI-Native Architecture Shift – Technical SEO 2026

Summary

Technical SEO in 2026 isn’t about speed scores or sitemaps anymore. It’s about how fast AI systems can understand, trust, and cite your expertise. We’re entering the era of ingestion control, where code clarity, response time, and structured proof matter more than traditional SEO tricks.

Ingestion Control & When the Builder Breaks Their Own Foundations

In mid-to-late 2025, I watched brighterwebsites.com.au slowly implode: 118 indexed pages out of 160+ dropped to 15 in three months.

No penalty. No algorithm update. Just me, breaking my own technical foundations.

A midnight rebuild and a too-early “fix” for Search Console errors triggered a cascade of redirect and rewrite conflicts, with LiteSpeed, WP Ghost, and my CDN all fighting for control of permalinks.

URLs vanished. Redirects failed. Crawl signals scattered.

To be fair, there was a strategy behind the chaos.

I’d decided to make my structure more semantically relevant for AI citation. It made sense: 60+ blogs lived at brighterwebsites.com.au/my-article-title and most pre-dated 2024.

So I added /blog/ and /projects/ to the URLs, thinking it was a tidy modernisation.

What looked like a “Google delay” turned out to be ingestion chaos of my own making.

Fixing it was humbling and instructive.

Once I fixed the rewrite handling, rebuilt redirects, and isolated each plugin layer, the indexing stabilised and slowly began to climb again.
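One way to catch this kind of conflict early is to audit the redirect map itself before any plugin or CDN rule goes live. Below is a minimal Python sketch, assuming the redirects can be exported as a simple old-path to new-path mapping. The paths and function names are hypothetical, not real rules from either site or any plugin's API.

```python
from typing import Dict, List


def resolve(path: str, redirects: Dict[str, str], max_hops: int = 10) -> List[str]:
    """Follow the redirect map from `path` and return every URL visited, in order."""
    chain = [path]
    while chain[-1] in redirects:
        nxt = redirects[chain[-1]]
        if nxt in chain:
            # Two rules point at each other: a crawler would never reach a page.
            raise ValueError("redirect loop: " + " -> ".join(chain + [nxt]))
        chain.append(nxt)
        if len(chain) > max_hops:
            raise ValueError(f"too many hops starting from {path}")
    return chain


def audit(redirects: Dict[str, str]) -> Dict[str, List[str]]:
    """Return every source URL that needs more than one hop to reach its target."""
    problems = {}
    for src in redirects:
        chain = resolve(src, redirects)  # raises loudly on loops
        if len(chain) > 2:
            problems[src] = chain
    return problems


# Hypothetical rules: one clean move into /blog/, one accidental two-hop chain.
redirects = {
    "/my-article-title": "/blog/my-article-title",
    "/old-project": "/project/old-project",
    "/project/old-project": "/projects/old-project",
}

for src, chain in audit(redirects).items():
    print(src, "takes", len(chain) - 1, "hops:", " -> ".join(chain))
```

Any chain the audit reports can be flattened so the old URL points straight at its final destination, which leaves only one layer deciding where a permalink ends up.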

The lesson was simple: machines can’t trust what you keep changing.

Meanwhile, Guerrilla Steel’s site, technically imperfect but architecturally clean, kept growing through the same period.

My chaos proved their strength: when your proof layer is structured and your schema unambiguous, AI crawlers filter the noise and keep citing you.

That’s ingestion control in practice.

Not “more pages”. Not bigger sitemaps. Just clarity.

Fewer URLs. Cleaner signals. Faster belief.

Rule #1 for 2026: Don’t optimise for discovery; optimise for confirmation.

If a machine has to guess what your page means, it will believe someone else instead.

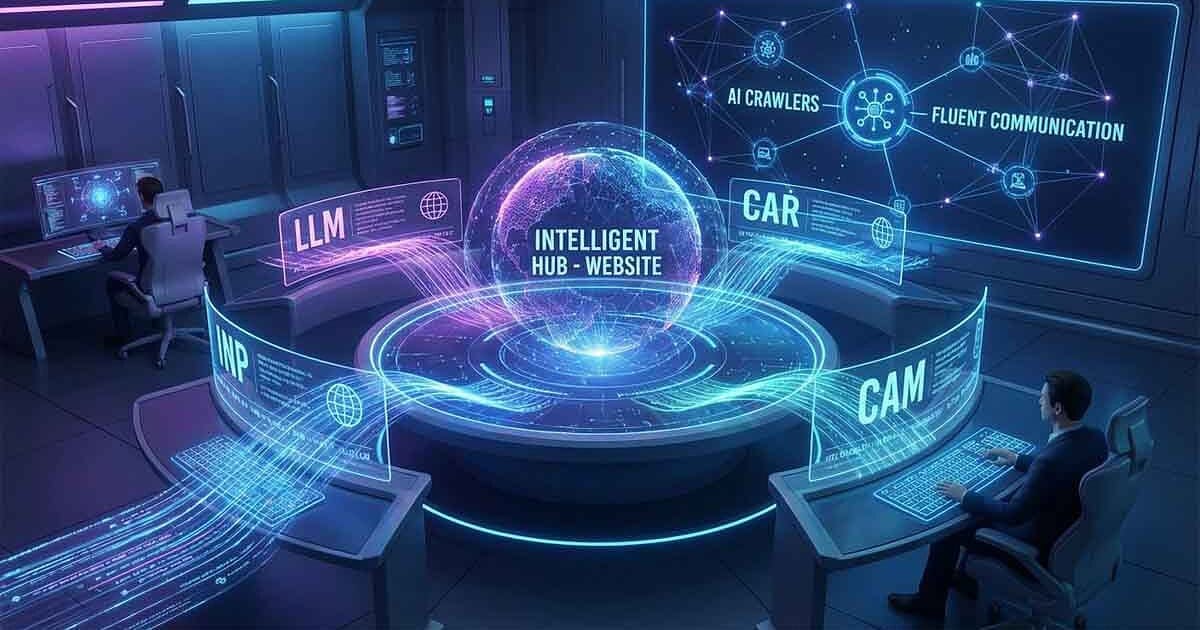

The Content Architecture Record (CAR) and the Content Authority Map are essential tools.

If ingestion control is about clarity, architecture control is about context: how every part of your site teaches machines what connects to what.

In the old world, you mapped URLs.

In 2026, you map meanings.

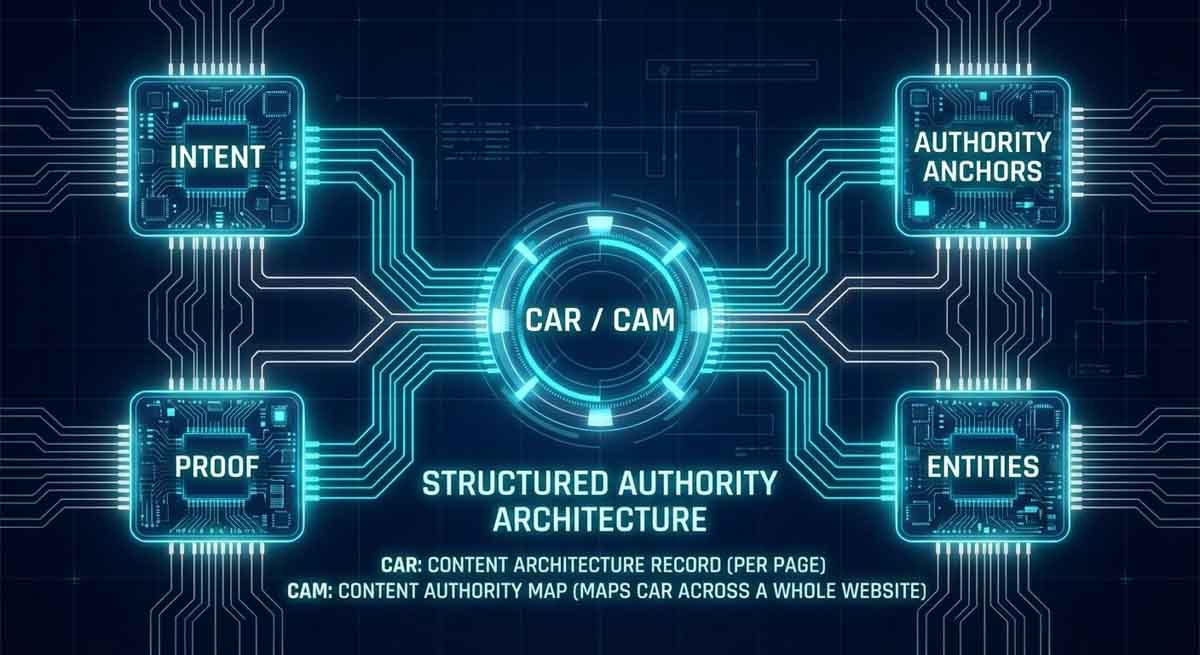

That’s where the idea of CAR (Content Architecture Record) and CAM (Content Authority Map) comes in: a structured way to show both humans and AI how your ecosystem fits together.

Think of it as the difference between having a sitemap and having a blueprint.

Guerrilla Steel’s strength wasn’t perfect code; it was architectural coherence.

Every product linked back to a project.

Every project linked to a testimonial.

Every testimonial referenced the same entity: Guerrilla Steel, Yatala.

This repetition, which spans both the schema and internal structure, became their proof system.
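To make that repetition concrete, here is a rough Python sketch of how a linked JSON-LD graph could be assembled and sanity-checked. The domain, page names, and the choice of schema.org types (Product, CreativeWork for a project page, Review for a testimonial) are assumptions for illustration, not Guerrilla Steel's actual markup.

```python
import json
from typing import Any, List, Set

BASE = "https://example.com"  # placeholder domain, not the real site
ORG_ID = f"{BASE}/#organization"
PRODUCT_ID = f"{BASE}/products/example-shed/#product"
REVIEW_ID = f"{BASE}/projects/example-build/#testimonial"

graph: List[dict] = [
    {
        "@type": "Organization",
        "@id": ORG_ID,
        "name": "Guerrilla Steel",
        "address": {"@type": "PostalAddress", "addressLocality": "Yatala"},
    },
    # Product -> entity
    {"@type": "Product", "@id": PRODUCT_ID, "name": "Example shed",
     "brand": {"@id": ORG_ID}},
    # Project -> product and testimonial
    {"@type": "CreativeWork", "@id": f"{BASE}/projects/example-build/#project",
     "name": "Example build", "about": {"@id": PRODUCT_ID},
     "review": {"@id": REVIEW_ID}},
    # Testimonial -> entity
    {"@type": "Review", "@id": REVIEW_ID, "itemReviewed": {"@id": ORG_ID},
     "author": {"@type": "Person", "name": "Example Customer"}},
]


def dangling_refs(nodes: List[dict]) -> Set[str]:
    """Return every {"@id": ...} reference that points at a node not in the graph."""
    defined = {node["@id"] for node in nodes if "@id" in node}
    missing: Set[str] = set()

    def walk(value: Any) -> None:
        if isinstance(value, dict):
            # A dict holding only "@id" is a pointer to another node.
            if set(value) == {"@id"} and value["@id"] not in defined:
                missing.add(value["@id"])
            for child in value.values():
                walk(child)
        elif isinstance(value, list):
            for child in value:
                walk(child)

    walk(nodes)
    return missing


print(json.dumps({"@context": "https://schema.org", "@graph": graph}, indent=2))
print("dangling references:", dangling_refs(graph))
```

The point of the check is that every pointer resolves to one shared identifier, so a typo in a single template can't quietly create a second "Guerrilla Steel".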

If LLMs.txt is version 1.0, and tells machines what to read, the CAR/CAM approach is the 2027 evolution that teaches them how to understand it.

It builds on the same foundation but adds a metadata layer that documents intent, relationships, proof, and authority anchors right alongside your content.

It’s the difference between being found and being fully understood.
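The CAR/CAM format itself is a topic for a later article, so treat the following as a purely hypothetical Python sketch of what one record in that metadata layer might hold. Every field and function name is invented for illustration; only the four ideas (intent, relationships, proof, authority anchors) come from the description above.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ContentRecord:
    """One hypothetical entry in a content architecture record."""
    url: str
    intent: str                                           # why the page exists
    relates_to: List[str] = field(default_factory=list)   # URLs it connects to
    proof: List[str] = field(default_factory=list)        # evidence backing it
    authority_anchor: str = ""                            # entity it reinforces


def gaps(records: List[ContentRecord]) -> Dict[str, List[str]]:
    """List the records that are missing proof, an anchor, or a valid relation."""
    known = {record.url for record in records}
    report: Dict[str, List[str]] = {}
    for record in records:
        issues = []
        if not record.proof:
            issues.append("no proof listed")
        if not record.authority_anchor:
            issues.append("no authority anchor")
        for target in record.relates_to:
            if target not in known:
                issues.append(f"unknown relation: {target}")
        if issues:
            report[record.url] = issues
    return report


# Invented example pages.
records = [
    ContentRecord("/projects/example-build", "show a finished build",
                  relates_to=["/products/example-shed"],
                  proof=["customer testimonial"],
                  authority_anchor="Guerrilla Steel, Yatala"),
    ContentRecord("/products/example-shed", "describe the product",
                  relates_to=["/projects/missing-page"]),
]

for url, issues in gaps(records).items():
    print(url, "->", "; ".join(issues))
```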

Stay tuned for a future article where I’ll unpack how CAR/CAM works inside the Website-First Blueprint and how it turns technical SEO from a maintenance checklist into a living architecture of trust.

When search engines and AI crawlers ingest your site, they’re not just indexing pages; they’re indexing trust.

The next phase of technical SEO isn’t just about what loads fastest.

It’s about what connects most clearly.

The New Metric: INP and Perceived Speed

By 2026, “site speed” has stopped being about how fast a page loads; it’s about how fast it responds.

INP (Interaction to Next Paint) replaced FID (First Input Delay) as a Core Web Vital in March 2024. It measures how long a page takes to visually react after a user interacts with it.
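For a feel of how the number behaves, here is a small Python sketch that approximates INP from a list of interaction durations and rates it against the published thresholds (200 ms or less is good, above 500 ms is poor). The function names and sample durations are invented, and browsers compute the real metric from field data, so this is only a simplified approximation.

```python
from typing import List

GOOD_MS = 200   # at or below this, INP is rated "good"
POOR_MS = 500   # above this, INP is rated "poor"


def estimate_inp(durations_ms: List[float]) -> float:
    """Approximate INP: the slowest interaction, skipping one outlier per 50."""
    if not durations_ms:
        raise ValueError("no interactions recorded")
    ranked = sorted(durations_ms, reverse=True)
    skip = min(len(ranked) - 1, len(ranked) // 50)
    return ranked[skip]


def rate(inp_ms: float) -> str:
    """Map an INP value in milliseconds to its rating band."""
    if inp_ms <= GOOD_MS:
        return "good"
    if inp_ms <= POOR_MS:
        return "needs improvement"
    return "poor"


# Invented sample: two quick taps and one slow menu open.
visit = [80, 120, 640]
inp = estimate_inp(visit)
print(f"INP is about {inp} ms: {rate(inp)}")
```

Because the metric tracks the worst interactions rather than the average, one sluggish menu or form can drag down a page that otherwise feels fast.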

If your JavaScript-heavy layout takes four seconds to hydrate, an AI agent, like Claude or ChatGPT’s search tools, might time out or skip your dynamic content altogether.

So “speed” now lives deeper than load time. It’s about LLM latency: how quickly machines can read, interpret, and confirm your authority.

Your stack (hosting, caching, and clean HTML structure) is no longer just a hosting and page-builder choice. It’s your AI-accessibility layer, defining whether your expertise is even reachable in real time.
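A quick way to test that reachability is to look at the raw HTML the server sends and ask whether your key phrases are there before any JavaScript runs. The Python sketch below does that with the standard-library parser. The sample markup and phrases are made up, and it assumes the client does not execute scripts, which may not hold for every AI agent.

```python
from html.parser import HTMLParser
from typing import List


class VisibleText(HTMLParser):
    """Collects the text a client that never runs JavaScript would see."""
    SKIP = {"script", "style", "template"}

    def __init__(self) -> None:
        super().__init__()
        self.skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_phrases(html: str, phrases: List[str]) -> List[str]:
    """Return the phrases that are absent from the server-rendered text."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Made-up pages: a JavaScript shell versus server-rendered markup.
shell = '<body><div id="root"></div><script>render("Steel sheds in Yatala")</script></body>'
rendered = "<body><main><h1>Steel sheds in Yatala</h1></main></body>"

print("shell is missing:", missing_phrases(shell, ["steel sheds"]))
print("rendered is missing:", missing_phrases(rendered, ["steel sheds"]))
```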

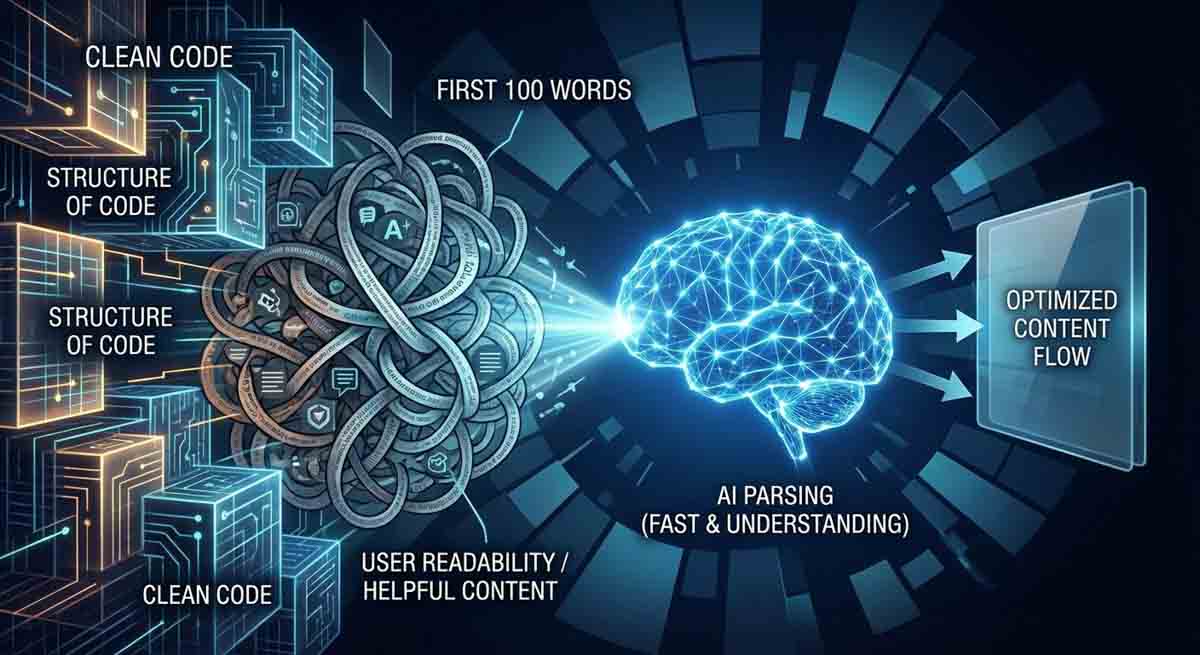

Content Ingestion Optimisation (The First 100 Words)

I heard someone say, “The first 100 words decide everything.”

Then I thought about it. Does that include code? Because if it does, I’m already out of room.

Between the site header, breadcrumbs, post meta, featured image, and everything else that loads before the actual paragraph, I’ve got maybe twenty words left. WTF. Not possible.

And that’s when it clicked.

This isn’t about copywriting for conversion or keyword visibility anymore; it’s about machine comprehension.

And maybe, we’re only just beginning to understand what that really means.

When AI crawlers scan a page, they’re not seeing what we see. They’re reading structure, markup, and meaning buried inside the code.

If your introduction makes them think too hard, you lose the citation.

If it gives them structured confidence, clear entities, context, and intent, you win it.

At Brighter Websites, we’ve started treating meta descriptions and opening paragraphs like structured data in plain English:

Say who the article is for, what it explains, and what it proves.

Then back that up later with schema, metrics, and media that confirm the promise.

That’s the new form of technical optimisation: semantic speed.

The faster a model can confirm your credibility, the faster your authority compounds.

Don’t chase attention in the first 100 words; earn belief.
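To see what those first words actually are, here is a rough Python sketch that pulls the opening words of a page's text, either from the top of the document or from inside a chosen tag. The sample page and the `first_words` helper are invented for illustration, and nobody outside the AI labs knows where a given crawler really starts counting.

```python
from html.parser import HTMLParser
from typing import List, Optional


class WordCollector(HTMLParser):
    """Gathers words in source order, optionally only inside one tag."""
    SKIP = {"script", "style", "template"}

    def __init__(self, scope: Optional[str] = None) -> None:
        super().__init__()
        self.scope = scope
        self.scope_depth = 0
        self.skip_depth = 0
        self.words: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == self.scope:
            self.scope_depth += 1
        if tag in self.SKIP:
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag == self.scope and self.scope_depth:
            self.scope_depth -= 1
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        in_scope = self.scope is None or self.scope_depth > 0
        if in_scope and not self.skip_depth:
            self.words.extend(data.split())


def first_words(html: str, limit: int = 100, scope: Optional[str] = None) -> List[str]:
    """Return the first `limit` words of text, optionally scoped to one tag."""
    collector = WordCollector(scope)
    collector.feed(html)
    collector.close()
    return collector.words[:limit]


# Invented sample page.
page = """
<body>
<header><nav>Home Services Blog Contact</nav></header>
<p class="breadcrumbs">Home / Blog / Technical SEO</p>
<main>
<h1>Technical SEO in 2026</h1>
<p>For site owners who want AI systems to cite them.</p>
</main>
</body>
"""

print("from the top: ", " ".join(first_words(page, limit=5)))
print("inside <main>:", " ".join(first_words(page, limit=5, scope="main")))
```

Run against a real template, the gap between the two outputs shows how much of the budget goes to navigation and breadcrumbs before the introduction starts.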

Flipping the Script, Literally – Avoiding DOM Slop

I mean flipping the script literally, as in “flip the code”.

What if we built websites AI-first and styled them for humans last?

How many lines of redundant code do crawlers have to fight through before they reach your actual content?

I did a quick check of my own site, then tidied up a few things I knew I could turn off safely. I still had 4266 “words” (hard to count with code, I know) and 40863 characters before my header even rendered! And we haven’t even got to the content yet! Again, WTF!

Update 30/12/2025: Clearing out my <head></head>, I’m now down to 28352 characters! With a little clever restructuring, I think we can bring it down even further.
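A count like this is easy to repeat on any page. The Python sketch below measures how many characters sit inside the head and how many come before a given tag opens. The helper names and the sample markup are hypothetical, and the regular expressions are a rough measure rather than a full HTML parse.

```python
import re


def head_chars(html: str) -> int:
    """Count the characters between <head> and </head>."""
    match = re.search(r"<head\b[^>]*>(.*?)</head>", html, re.S | re.I)
    return len(match.group(1)) if match else 0


def chars_before(html: str, tag: str) -> int:
    """Count the characters of source that come before the first <tag>."""
    match = re.search(rf"<{re.escape(tag)}\b", html, re.I)
    return match.start() if match else len(html)


# Hypothetical page with a padded <head> standing in for scripts and styles.
sample = (
    "<html><head>" + "x" * 100 + "</head>"
    "<body><header>nav</header><main>Hi</main></body></html>"
)

print("characters in <head>:    ", head_chars(sample))
print("characters before header:", chars_before(sample, "header"))
print("characters before main:  ", chars_before(sample, "main"))
```

Saving the page source from the browser and feeding it to these two functions gives a before-and-after number for every script or plugin that gets switched off.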

Let’s call it DOM Slop, the digital equivalent of messy wiring behind a perfect-looking wall.

We add scripts and features, thinking we’re improving visibility or performance, but some of it might be doing the opposite.

Maybe the balance we need to strike isn’t between speed and beauty; it’s between clarity and comprehension.

If the first 100 words matter, where do they start?

In the <main> tag? The visible text?

Does the order of schema and other code in the <head> matter more than we think?

Do we really need OG tags for every social platform anymore?

How much “global” CSS is quietly bloating every page that never even uses it?

AI is getting smarter at skipping what it doesn’t need, but in high-competition spaces, even milliseconds and markup order might decide who gets cited first.

The future might not just measure content quality but code discipline.

My prediction? We’ll start optimising code hierarchy, not just visual hierarchy.

Possible “Machine Order of Importance”

<main>

- H1

- TLDR

- Author Box

- Content

- Breadcrumbs

- Post Meta

- Comments

- Featured Image

</main>

<header>

<footer>

How humans like to see it

<header>

- Breadcrumbs

- H1

- Post Meta

- Featured Image

- TLDR

- Author Box

- Content

<footer>

Maybe AI already knows to skip headers and footers.

Or maybe, in a year, it will matter.

Either way, what really defines technical SEO in 2026 might not be the first 100 words but the structure under the surface: whether AI can parse the point of your content in under 200 milliseconds.

Infrastructure Beats Tactics (The Closing Synthesis)

Every major visibility story in 2025 and 2026 comes back to one thing: infrastructure wins when tactics fail.

Code, site, content, SEO, CRO, performance, copy, messaging, visuals – they all work together and create your infrastructure – for humans and AI.

Guerrilla Steel proved it. When our social algorithms broke, sitemap chaos unfolded, and even Merchant Centre went dark, their visibility still climbed. Not because of luck but because the foundation and authority structure held.

My own site proved the opposite.

Even with expert-level tools, my agency site evolved like a DIY owner-builder project that skipped planning and approvals – architecture cracked, and the signals collapsed.

That contrast is the whole point of the Website-First Blueprint: build the system before the strategy, or every tactic becomes fragile.

Search, social, CRO, and AI – they’re all just distribution layers.

Without a coherent foundation underneath, they magnify inconsistency instead of authority.

Infrastructure isn’t glamorous. It’s the plumbing that makes everything else work, but in a world where AI rewrites the rules monthly, it’s the only layer that survives the reset.

Don’t chase algorithms. Build architectures they can trust.

Because when the next update hits, tactics crumble.

Infrastructure adapts.

And that’s the quiet advantage of those who build for confirmation, not discovery.

Looking Ahead to 2027 (LLMs.txt → CAR/CAM → AI-Native Infrastructure)

If 2025 was the year we realised indexing wasn’t guaranteed, and 2026 became the year ingestion control defined visibility, then 2027 is shaping up to be the year of AI-native infrastructure, where content, code, and context finally merge.

LLMs.txt was version 1.0, a translator between your website and the machines trying to understand it.

It told AI what mattered and what didn’t. It was a start.

But it didn’t explain relationships.

It didn’t define intent.

It didn’t prove authority.

That’s where CAR/CAM comes in, the evolution beyond index files.

Think of it as a blueprint that documents not just the pages, but the reasoning behind them:

what connects to what, why it exists, and what it’s meant to prove.

A system like this could form the foundation of AI-native infrastructure: websites built to communicate meaning first, style second.

Where every line of code, schema tag, and internal link reinforces a single message:

“This source can be trusted.”

Because in a world where algorithms rewrite themselves weekly, clarity is the only constant.

2027 Prediction: The sites that win won’t be the prettiest or the loudest; they’ll be the ones that make their authority undeniable to both humans and machines.

Stay tuned; the next piece will break down exactly how CAR/CAM integrates into the Website-First Blueprint and how the next generation of regional businesses can build AI-ready authority from the ground up.

The 2026 Technical SEO Checklist & a few New Terms defined

All this talk of ingestion control and infrastructure might sound abstract until you look at what it means in practice.

So I wrote my own “2026 Technical SEO Checklist”, a practical list of what’s really changed, what still matters, and which “best practices” have quietly expired.

And no, it’s not just a box-ticking exercise for meta tags or heading hierarchy.

It’s my new baseline for AI-native, authority-first optimisation, built from the same frameworks I use internally across every Brighter Websites project.

This article uses a few “New Terms”, or at least terms that are less commonly talked about, so here are answers to some questions you might have if you are just starting to explore what LLM ingestion is all about.

What is Ingestion Control in SEO?

Ingestion control is about helping search engines and AI systems understand your content clearly. Instead of chasing more indexed pages or bigger sitemaps, it focuses on building clean, consistent signals, so machines can confirm what your site means, not just find it.

What are CAR and CAM in technical SEO?

CAR (Content Architecture Record) and CAM (Content Authority Map) are emerging concepts that go beyond traditional sitemaps. They document how your content connects, what each section proves, and which entities or data back it up, helping AI systems understand context and authority, not just URLs.

What is Page Speed INP and why does it matter?

INP (Interaction to Next Paint) measures how quickly a webpage responds to user interaction. It replaced FID as a Core Web Vital in March 2024. In 2026 and beyond, a low INP means your site is responsive for humans, and the lean code behind it helps machine crawlers too, reducing latency and improving how quickly AI systems can parse your expertise.

What does “AI-Accessibility Layer” mean?

Your AI-Accessibility Layer is the technical stack (servers, caching, and clean HTML) that determines whether AI crawlers can easily access and interpret your site. Fast, clear, and semantically structured sites are more “AI-readable,” improving citation and inclusion in AI search tools.

What is meant by ‘Infrastructure Beats Tactics’?

It means long-term visibility comes from solid technical and architectural foundations, not short-term SEO tricks. When your structure, schema, and internal linking are coherent, updates or algorithm changes have less impact, and your site remains stable and trustworthy to both humans and machines.